Department of Computer Science

Technical University of Cluj-Napoca

Introduction to Artificial Intelligence

Adrian Groza, Radu Razvan Slavescu and Anca Marginean

UTPRESS

Cluj-Napoca, 2018

ISBN 978-606-737-290-8

Contents

1 Problem-solving agents
1.1 Python programming language
1.2 Pac-Man framework
1.3 Solutions to exercises

2 Uninformed search
2.1 Let's find the food-dot. Search problem
2.2 Random search agent
2.3 Tree search and graph search
2.4 Depth first, breadth first and uniform cost search strategies
2.5 Solutions to exercises

3 Informed search
3.1 A* algorithm
3.2 Admissible and consistent heuristics
3.3 Finding all corners. Eating all food
3.4 Solutions to exercises

4 Adversarial search
4.1 Reflex agent
4.2 Minimax algorithm
4.3 Alpha-beta pruning
4.4 Solutions to exercises

5 Propositional logic
5.1 Getting started with Prover9 and Mace4
5.2 First example: Socrates is mortal
5.3 A more complex example: FDR goes to war
5.4 Wumpus world in Propositional Logic
5.5 Solutions to exercises

6 Models in propositional logic
6.1 Formalizing puzzles: Princesses and tigers
6.2 Finding more models: graph coloring
6.3 Solutions to exercises

7 First Order Logic
7.1 First Example: Socrates in First Order Logic
7.2 FDR goes to war in FOL
7.3 Alligator and Beer: finding models in FOL
7.4 Solutions to exercises

8 Inference in First-Order Logic
8.1 Revisiting resolution and skolemization
8.2 Paramodulation and demodulation
8.3 Let's find the Wumpus!
8.4 Solutions to exercises

9 Constraint satisfaction problems
9.1 Solving CSP by consistency checking
9.2 Solving CSP with stochastic local search
9.3 Solving CSP with satisfiability solvers
9.4 Solutions to exercises

10 Classical planning
10.1 Planning domains
10.2 Planning Domain Definition Language
10.3 Fast Downward planner
10.4 Solutions to exercises

11 Heuristics for planning
11.1 Defining heuristics by relaxation
11.2 Search engines and heuristics in the Fast Downward planner
11.3 Introducing costs to actions
11.4 Modelling planning domains
11.5 Solutions to exercises

12 Planning and acting in the real world
12.1 Planning domains with uncertainty
12.2 Conformant planning
12.3 Contingent planning
12.4 Solutions to exercises

13 Knowledge representation with event calculus
13.1 Discrete Event Calculus reasoner
13.2 Reasoning modes in event calculus
13.3 Carrying a newspaper example
13.4 Solutions to exercises

A Brief synopsis of Linux
B LaTeX - let's roll!
C Fast and furious tutorial on Python

Introduction

This tutorial follows the structure of the AIMA textbook. The tutorial proposes exercises for

the first twelve chapters of the textbook and has three parts.

The first part deals with agents that solve problems by searching. Uninformed search strategies (depth-first search, breadth-first search, uniform-cost search) are compared against informed search strategies (the A* algorithm). The strength of the A* algorithm is demonstrated through various admissible and consistent heuristics. The search strategies are presented through a game-based framework, the Pacman agent. The Pacman game also allows students to practice adversarial search. This part relies on the search tutorial used at Berkeley, http://ai.berkeley.edu/project_overview.html. The programming language for this part is Python, as this language has risen to become the main platform for artificial intelligence.

The second part focuses on knowledge representation based on propositional and first-order logic. The Prover9 theorem prover and the Mace4 satisfiability tool are used to illustrate concepts such as resolution, unsatisfiability, models, and conjunctive normal form. The chapter presenting constraint satisfaction problems allows students to trace local search algorithms: random walk, hill climbing, stochastic hill climbing, greedy descent with random restarts, and simulated annealing.

The third part deals with planning agents. The Fast Downward planning system is used to solve classical planning problems. The role of heuristics in planning is stressed through various experiments and running scenarios. We also consider partial observability and nondeterminism, which are handled with conformant planning. Moreover, the ability to observe aspects of the current state is handled by contingent planning.

The proposed exercises are rather ambitious. Some exercises are straightforward, while others are open-ended and meant to stimulate research among eager students. The rationale was to accommodate the heterogeneity of the learners. The focus is both on programming and on modelling reality in a formal representation. Students should be aware that computing interacts with many different domains. Solutions to many artificial intelligence tasks require both computing skills and domain knowledge. This laboratory best serves as a test of whether you currently have the potential to propose solutions to realistic scenarios and to validate your solutions with a running prototype. The assignments are designed to give you an insight into research methodology by developing your critical thinking, enhancing your ability to work independently and developing your technical writing. Side effects of completing this tutorial include the pleasure of discovering the strength of Linux for scientific experiments and also the most powerful framework for scientific editing, that is LaTeX.

Chapters 1-4 and the appendix on Python were written by Anca Marginean. Chapters 5-8 and the appendices on Linux and LaTeX were written by Radu Razvan Slavescu. Chapter 9 is joint work by Radu Razvan Slavescu and Adrian Groza. Chapters 10-13 were written by Adrian Groza.

Chapter 1

Problem-solving agents

Learning objectives for this week are:

1. To get used to, or recall, the Python programming language

2. To run the Pacman framework

1.1 Python programming language

The first four laboratory works use a framework developed in Python, so you need basic Python programming skills. Appendix C is a brief overview of the main elements you will use. Whether you are a Python guru or a Python beginner, read it and do the exercises.

1.2 Pac-Man framework

The Pac-Man projects were developed for UC Berkeley's introductory artificial intelligence course and were released to other universities for educational use: http://ai.berkeley.edu/project_overview.html. The AI techniques applied in the projects include search, adversarial search, reinforcement learning, probabilistic inference with hidden Markov models, and machine learning with Naive Bayes or the Perceptron. During this semester you will study the first two.

Pac-Man is a game with several entities: Pacman, ghosts, food-dots and power-pellets. The player controls Pacman in its quest to eat all the food-dots. Pacman dies if it is eaten by a ghost, but if Pacman eats a power-pellet, it gains the temporary ability to eat the ghosts as well.

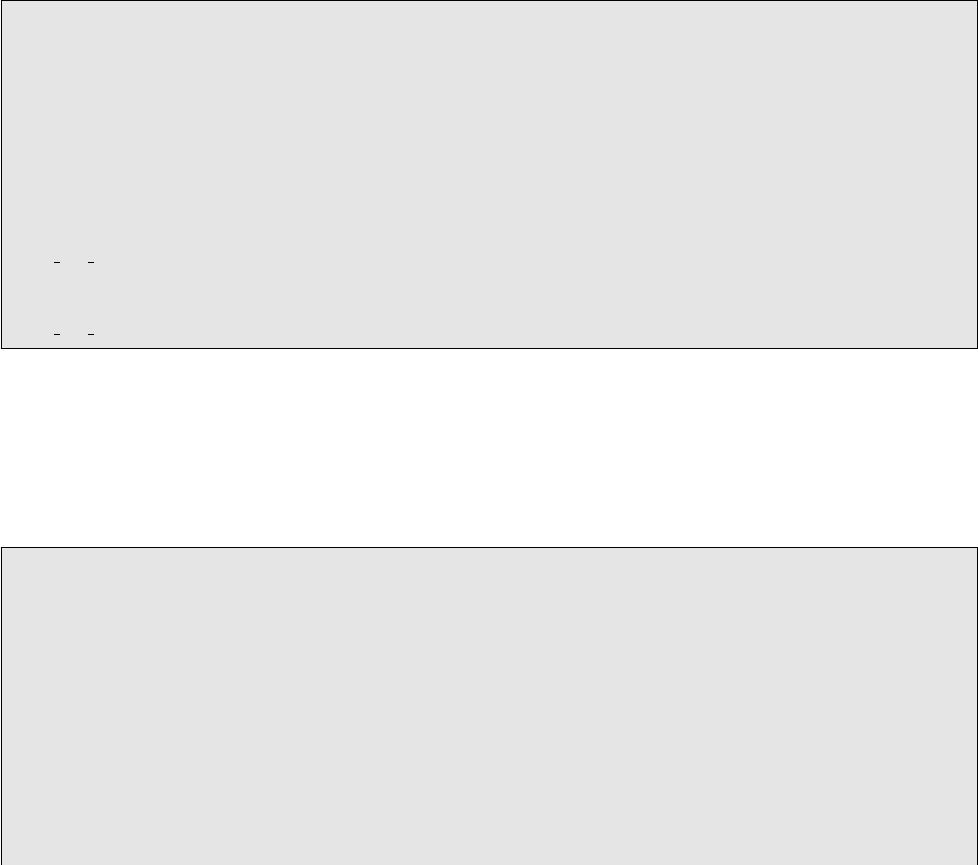

Our aim is to build agents that control Pacman and win. The possible actions are North, South, East, West and Stop, depending on the presence of walls (see figure 1.1). With each step Pacman loses 1 point, for each food-dot eaten it gets 10 points, and on finishing the game it gets 500 points.
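The scoring rules can be checked with a small worked example. The sketch below is not part of the Pac-Man framework: the function name final_score and the constant names are hypothetical, and it assumes a ghost-free game in which only steps, food-dots and the final bonus affect the score.

```python
# Scoring rules as stated in the text; the names are assumptions for illustration.
STEP_PENALTY = 1     # points lost with each step
FOOD_REWARD = 10     # points gained for each food-dot eaten
WIN_BONUS = 500      # points gained on finishing the game


def final_score(steps, food_eaten, won):
    """Return the score of a ghost-free game under the rules above."""
    score = FOOD_REWARD * food_eaten - STEP_PENALTY * steps
    if won:
        score += WIN_BONUS
    return score


# Eight steps, one food-dot eaten, game finished: -8 + 10 + 500
example_score = final_score(steps=8, food_eaten=1, won=True)
```

Under these assumptions a shorter path to the same food-dot always gives a higher score, which is why the cost of a solution matters in the following chapters.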

Figure 1.1: Legal actions for Pacman when there is a wall. (Diagram: Pacman, marked P, on a grid with x from 1 to 4 and y from 1 to 3; the legal actions shown are North, East and West.)

Figure 1.2: Run configuration for: python pacman.py -l smallMaze -p SearchAgent

For the study of search problems and agents, a simpler version of Pacman is used, in which the ghosts are not present. Download the code from https://s3-us-west-2.amazonaws.com/cs188websitecontent/projects/release/search/v1/001/search.zip and extract it into your own folder.

You can play a game of Pacman by running the script pacman.py:

    python pacman.py

You can see the available options for the script pacman.py with:

    python pacman.py -h

The layout must be specified with the option -l or --layout. The agent is specified with -p or --pacman. The layouts are defined in the layouts folder. The agents are defined in the file searchAgents.py.

Using PyCharm

You can use any text editor for editing the files or, if you prefer working in an IDE, you can use PyCharm.

Possible steps in using PyCharm:

1. Start PyCharm and create a new project with the location in the folder search and with

python 2.7 as project interpreter.

2. Run pacman.py: Right click on pacman.py and run. This will create a default Run

Configuration. You can run the current configuration with SHIFT+F10.

3. You can change or add new Run configurations from the menu Run >> Edit configurations

or from the Select Run Configuration drop-down list in the right upper corner (see figure

1.2).

    $ pycharm.sh
    File >> New Project >> Pure Python
    <choose the search folder from your own folder for Location>
    <select Python 2.7 for Interpreter>

    Run >> Edit Configurations >> + >> Choose Python >> Edit the parameters: Script, Script parameters, and Working directory

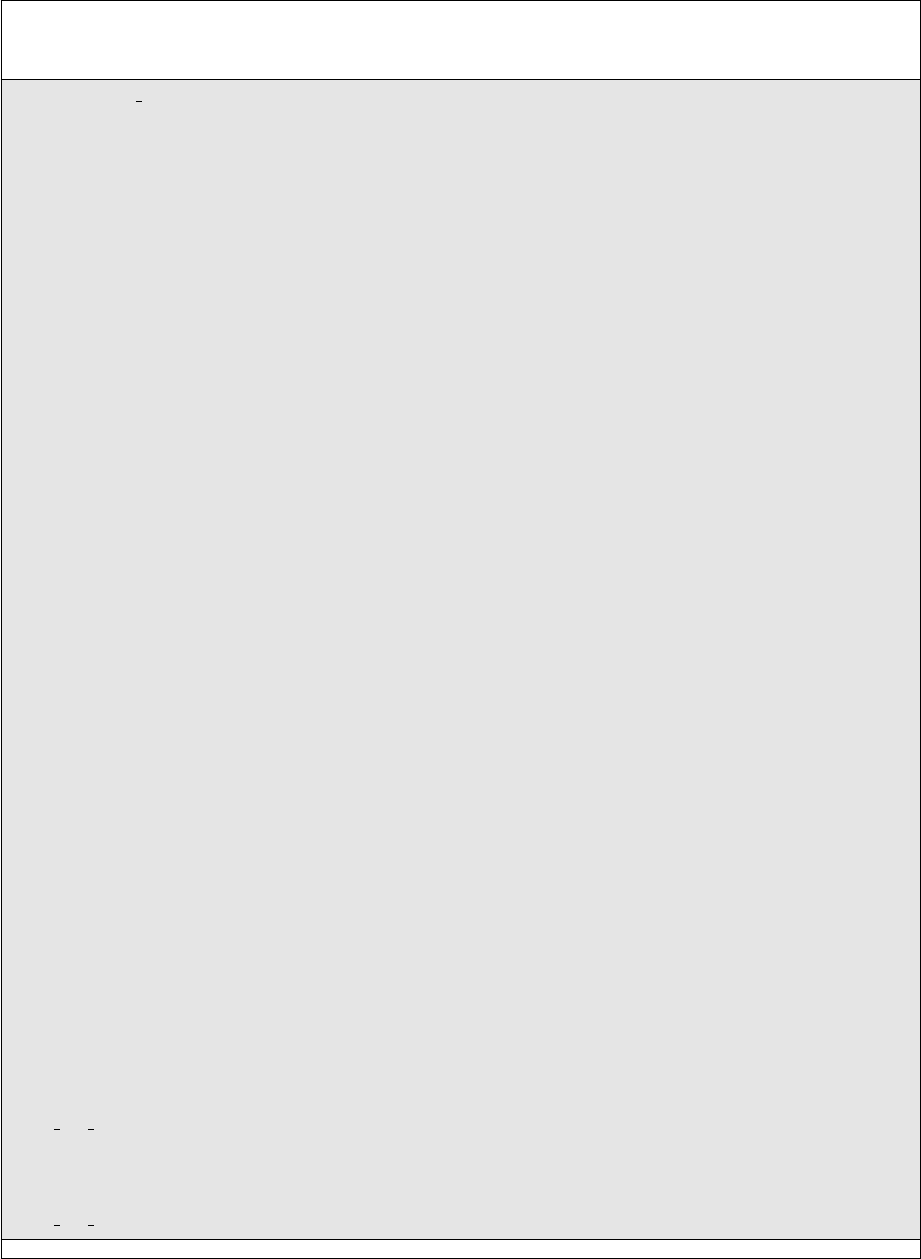

Figure 1.3: Types of search agents from searchAgents.py.

There are several ways of running Pacman: from a terminal outside PyCharm, from the PyCharm terminal, or with PyCharm Run configurations. To open the PyCharm terminal, use ALT+F12 or go to the bottom-left corner of the PyCharm window. For all exercises the script is pacman.py, but the parameters will differ, mainly for the layout and the agent. Since you will run Pacman with many different options, you should create a Run Configuration for each combination. The file commands.txt from the search folder contains a list of the most common commands.

Content of search folder

The extracted folder search contains several files. Each file, class or method includes comments which are very important for you, mainly for the classes or methods which are to be changed. You have to change only the areas marked with YOUR CODE HERE.

• Files to be changed

– search.py - describes the abstract class SearchProblem and contains several functions where you are supposed to add your code. The main methods of the SearchProblem class are:

∗ getStartState(self) - returns the initial state.

∗ isGoalState(self, state) - checks whether a state is a goal state and returns True or False.

∗ getSuccessors(self, state) - takes a state and returns a list of legal successors, together with the required actions and their costs.

∗ getCostOfActions(self, actions) - takes a sequence of actions and returns its cost.

– searchAgents.py - includes the search-based agents, the search problems (already implemented or to be implemented), and the heuristics.

∗ search problems: there are two classes derived from SearchProblem:

· PositionSearchProblem - a search problem in which Pacman needs to find a certain position or positions. The class AnyFoodSearchProblem is derived from it and describes the problem of finding a path to any food-dot.

· CornersProblem - a search problem in which Pacman needs to find all the corners.

∗ agents: there are two important agents defined, a SearchAgent with several derived classes (figure 1.3), and a very simple reflex agent, GoWestAgent.

• Files with parts worth reading

– pacman.py - the main file for running Pacman games. Read the description of the GameState type, which specifies the full game state.

– game.py - the logic behind how the Pacman world works. Important types: AgentState, Agent, Directions, Grid.

– util.py - data structures which are recommended when implementing the search algorithms.

In order to better understand the structure of the project, you can create class diagrams in

PyCharm (Right click on a file >> Diagrams). Do not forget, you will most likely change only

the files search.py and searchAgents.py.

Exercise 1.1 Analyze the implementation of GoWestAgent from searchAgents.py and run

the agent with the following commands:

    python pacman.py -l testMaze -p GoWestAgent
    python pacman.py -l tinyMaze -p GoWestAgent

Run them from: i) PyCharm terminal; ii) PyCharm Run configuration; iii) system’s terminal.

Does Pacman reach the food from (1,1) for both layouts? Why?

Exercise 1.2 Watch some videos of Pacman guided by search algorithms. At the end of the first four labs you will be able to solve similar problems.

• Why do we need search? http://cs-gw.utcluj.ro/~anca/iia/why_search.ogv

• Different layouts solved with different search algorithms: http://cs-gw.utcluj.ro/~anca/iia/examples.ogv

• Comparison of several strategies on the same layout: http://cs-gw.utcluj.ro/~anca/iia/comparison_search_strategies.ogv

• Pacman and several ghosts: http://cs-gw.utcluj.ro/~anca/iia/multipacman.ogv

Chapter 2

Uninformed search

This lab introduces search algorithms and search strategies for problem-solving agents. You will implement them in the Pac-Man framework and apply them to a position search problem: helping Pac-Man find a certain food-dot. Before delving into this, you will do some exercises to get familiar with the Pac-Man framework.

Learning objectives for this week are:

1. Understand agents that solve problems by searching

2. Understand tree search and graph search

3. Develop uninformed search strategies: depth-first search, breadth-first search, uniform-cost search

2.1 Let’s find the food-dot. Search problem

In the first problem, Pac-Man needs to find a certain food-dot. The initial Pac-Man position and the position of the food-dot are known. The only possible actions for Pac-Man are going North, South, East, or West.

The solution to this problem is a sequence of actions on the Pacman board. The cost of the solution is the sum of the costs of its actions. Positions (x, y) determine the state space. The layouts described in the folder layouts specify the initial Pac-Man position, the food-dot position and the walls.

Open search.py and read the tinyMazeSearch function. Run the command

    python pacman.py -l tinyMaze -p SearchAgent -a fn=tinyMazeSearch --frameTime=1

or add a new run configuration with the corresponding arguments. If you want to slow down the movement of Pac-Man, use the option --frameTime.

Exercise 2.1 Read the output of your previous command. How many nodes were expanded? What is the total cost of the solution found?

Exercise 2.2 Why does the agent find the dot? Change the maze (to another one from the layouts folder). Does it still work? Does Pacman find the food?

The problem is completely formalized in the class PositionSearchProblem from searchAgents.py. Conceptually it is a search problem, therefore its class extends the class SearchProblem. Search-based agents are able to solve search problems, meaning that they are able to compute sequences of actions for a certain layout. The most important advantage of using search problems and search-based agents is that the agents are problem-independent. Therefore, the same agent can solve the Pacman food problem, but also the eight-puzzle problem, once the problems are modelled as search problems.

A search problem can be defined by:

• initial state

• possible actions

• transition model: Result(s, a). A successor state is a state reachable from a given state by a single action.

• goal test: it is true only in goal states.

• path cost

All the search problems from the Pac-Man project are described in these terms. For the food-dot problem, the initial state is the initial Pacman position. The possible actions are North, South, East and West; for each action, the transition model gives the new position (x′, y′) reached after doing the action from (x, y). The goal test returns true when Pacman is at the same position as the food-dot. In the default problem each action costs 1, therefore the path cost is equal to the number of actions. The solution to a search problem is a sequence of actions which, if executed from the initial state, reaches a goal state.
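To make the five components concrete, here is a minimal sketch of a position search problem on a small grid. It only mimics the method names of SearchProblem mentioned in this tutorial; the class GridPositionProblem, its constructor arguments and the 3x3 layout are assumptions made for illustration and are not the framework's PositionSearchProblem.

```python
class GridPositionProblem(object):
    """Hypothetical stand-in for a position search problem on a small grid."""

    # (action name, dx, dy); y grows towards North
    MOVES = [("North", 0, 1), ("South", 0, -1), ("East", 1, 0), ("West", -1, 0)]

    def __init__(self, width, height, walls, start, goal):
        self.width, self.height = width, height
        self.walls = set(walls)
        self.start, self.goal = start, goal

    def getStartState(self):
        # initial state
        return self.start

    def isGoalState(self, state):
        # goal test: true only in the goal position
        return state == self.goal

    def getSuccessors(self, state):
        # possible actions + transition model: (next state, action, step cost)
        x, y = state
        successors = []
        for action, dx, dy in self.MOVES:
            nxt = (x + dx, y + dy)
            inside = 1 <= nxt[0] <= self.width and 1 <= nxt[1] <= self.height
            if inside and nxt not in self.walls:
                successors.append((nxt, action, 1))
        return successors

    def getCostOfActions(self, actions):
        # path cost: every action costs 1 in this sketch
        return len(actions)


problem = GridPositionProblem(3, 3, walls=[(2, 1)], start=(3, 3), goal=(1, 1))
start_successors = problem.getSuccessors(problem.getStartState())
```

Because a search function only calls these four methods, the same function can be reused for any problem that exposes them.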

Exercise 2.3 Read sections 3.1.1 and 3.1.2 from AIMA to learn what search problems are and how to formalize them.

2.2 Random search agent

The final aim of this laboratory work is to solve Pacman food problem, but before that, you

will do some exercises which help you understand the structure of the code which is relevant

for you.

Exercise 2.4 Open searchAgents.py and go to the class PositionSearchProblem. Read the

comments. Identify the main elements of a search problem: initial state, goal test, successor

and cost of actions. Identify the order in which the legal actions are returned in getSuccessors

method.

Exercise 2.5 Go to the depthFirstSearch function from search.py and uncomment the existing lines:

    print "Start:", problem.getStartState()
    print "Is the start a goal?", problem.isGoalState(problem.getStartState())
    print "Start's successors:", problem.getSuccessors(problem.getStartState())

Run again

    python pacman.py -l smallMaze -p SearchAgent

Figure 2.1: Initial position of Pacman in smallMaze layout

    Start: (11, 6)
    Is the start a goal? False
    Start's successors: [((11, 7), 'North', 1), ((12, 6), 'East', 1), ((10, 6), 'West', 1)]

The initial position is (11, 6) and it is not a goal state (figure 2.1). The call problem.getSuccessors(problem.getStartState()) returns a list of three tuples, one for each legal action. Each tuple contains the next state, the action and the action's cost. Only three actions are legal because there is a wall to the south.

Exercise 2.6 Go to the depthFirstSearch function from search.py. Get the successors of the initial state and print the state, the action and the cost for each successor. Run again with smallMaze.

Exercise 2.7 Go to the depthFirstSearch function from search.py. Comment out the code from the previous exercises. Comment out util.raiseNotDefined() and, similarly to tinyMazeSearch, add to the depthFirstSearch function:

    from game import Directions
    w = Directions.WEST
    return [w, w]

Run again

    python pacman.py -l smallMaze -p SearchAgent

Important: each search function must return a list of legal actions. Otherwise, you will get an

error.

Exercise 2.8 Go to the depthFirstSearch function from search.py. Return a sequence of two legal actions from the initial state.

Exercise 2.9 Go to the depthFirstSearch function from search.py. Create a new data structure with two components: name and cost. Create two instances of the new data structure and add them to a Stack, as described in util.py. Pop an element from the stack and print it.

Exercise 2.10 Random search agent. As a baseline, we will create an agent which searches for a solution randomly: it just picks one legal action at each step of the search. Write a new search function in search.py, similar to the tinyMazeSearch function. The function returns a list of actions from the initial state to a goal state, each action being randomly selected from the set of legal actions.


2.3 Tree search and graph search

The general algorithms for solving search problems [23] are described in the following listings:

    function TREE-SEARCH(problem) returns a solution, or failure
        initialize the frontier using the initial state of problem

        loop do
            if the frontier is empty then return failure
            choose a leaf node and remove it from the frontier
            if the node contains a goal state
                then return the corresponding solution
            expand the chosen node, adding the resulting nodes to the frontier

    function GRAPH-SEARCH(problem) returns a solution, or failure
        initialize the frontier using the initial state of problem
        initialize the explored set to be empty

        loop do
            if the frontier is empty then return failure
            choose a leaf node and remove it from the frontier
            if the node contains a goal state
                then return the corresponding solution
            add the node to the explored set
            expand the chosen node, adding the resulting nodes to the frontier
                only if not in the frontier or explored set
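The GRAPH-SEARCH listing can be sketched in Python as follows. This is an illustration under stated assumptions, not the solution expected by the autograder: the function graph_search, its parameters and the toy graph GRAPH are hypothetical, and the frontier stores (state, actions so far) pairs instead of full nodes. The lifo flag only decides which end of the frontier is popped.

```python
from collections import deque


def graph_search(start, is_goal, successors, lifo=False):
    """Generic GRAPH-SEARCH; returns a list of actions, or None on failure."""
    frontier = deque([(start, [])])       # entries: (state, actions so far)
    in_frontier = {start}                 # states currently in the frontier
    explored = set()
    while frontier:
        state, actions = frontier.pop() if lifo else frontier.popleft()
        in_frontier.discard(state)
        if is_goal(state):
            return actions
        explored.add(state)
        for nxt, action, _cost in successors(state):
            # add only if not in the frontier or explored set
            if nxt not in explored and nxt not in in_frontier:
                frontier.append((nxt, actions + [action]))
                in_frontier.add(nxt)
    return None


# Toy graph: state -> list of (next state, action, cost); values are made up.
GRAPH = {
    "A": [("B", "a->b", 1), ("C", "a->c", 1)],
    "B": [("D", "b->d", 1)],
    "C": [("D", "c->d", 1)],
    "D": [],
}
fifo_plan = graph_search("A", lambda s: s == "D", lambda s: GRAPH[s])
lifo_plan = graph_search("A", lambda s: s == "D", lambda s: GRAPH[s], lifo=True)
```

The two calls differ only in the order in which nodes leave the frontier, yet they return different plans; this is the point developed in section 2.4.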

We recommend using the following structure for nodes ([23]) in tree/graph search algorithms:

• state: the state in the state space to which the node corresponds;

• parent: the node in the search tree that generated this node;

• action: the action that was applied to the parent to generate the node;

• path-cost: the cost of the path from the initial state to the node
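A minimal sketch of such a node structure is given below. The names Node, child_node and solution are assumptions chosen for illustration (the field path_cost stands in for path-cost); the framework does not prescribe them. The sketch shows how the parent links allow the solution to be rebuilt once a goal node is found.

```python
from collections import namedtuple

# The four fields follow the list above.
Node = namedtuple("Node", ["state", "parent", "action", "path_cost"])


def child_node(parent, state, action, step_cost):
    """Create the node reached from parent by applying action."""
    return Node(state, parent, action, parent.path_cost + step_cost)


def solution(node):
    """Follow the parent links back to the root and return the actions in order."""
    actions = []
    while node.parent is not None:
        actions.append(node.action)
        node = node.parent
    actions.reverse()
    return actions


root = Node(state=(3, 3), parent=None, action=None, path_cost=0)
n1 = child_node(root, (2, 3), "West", 1)
n2 = child_node(n1, (1, 3), "West", 1)
```

Storing the parent instead of the whole action list keeps each node small; the price is one walk back to the root when the goal is reached.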

Exercise 2.11 Read section 3.3 from AIMA about tree search and graph search as general methods of searching for solutions.

The strategy for choosing the node to be expanded strongly influences the length of the solution, as well as the time and space required for searching. You must implement three uninformed search strategies and compare their application to the Pacman food-dot problem.

2.4 Depth first, breadth first and uniform cost search strategies

Depth-first search is a tree/graph-search with the frontier as LIFO (stack). Breadth-first search

is a tree/graph-search with the frontier as FIFO (queue). Uniform cost search is a search with

the frontier as a priority queue, meaning that it expands the node with the lowest path cost

g(n).
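The effect of the three frontier types can be seen by inserting the same nodes into each and watching the order in which they come out. The sketch below uses Python's standard library (list, collections.deque, heapq) rather than the Stack, Queue and PriorityQueue classes from util.py; the function name removal_orders and the sample values are assumptions.

```python
import heapq
from collections import deque


def removal_orders(generated):
    """Return the order in which states leave a stack, a queue and a priority queue.

    generated is a list of (state, g) pairs in the order they were generated,
    where g is the path cost of the node.
    """
    stack = list(generated)                        # LIFO: depth-first search
    queue = deque(generated)                       # FIFO: breadth-first search
    heap = [(g, state) for state, g in generated]  # priority g(n): uniform cost search
    heapq.heapify(heap)
    dfs, bfs, ucs = [], [], []
    while stack:
        dfs.append(stack.pop()[0])
        bfs.append(queue.popleft()[0])
        ucs.append(heapq.heappop(heap)[1])
    return dfs, bfs, ucs


# Made-up nodes: A was generated first, C last; B has the lowest path cost.
dfs_order, bfs_order, ucs_order = removal_orders([("A", 4), ("B", 1), ("C", 3)])
```

The stack returns the most recently generated node first, the queue the oldest one, and the priority queue the cheapest one, regardless of when it was generated.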

DFS on the 3x3 layout with a wall at (2, 1). States are expanded in the order (3, 3), (2, 3), (1, 3), (1, 2), (2, 2), (3, 2), (3, 1).

| Extracted node from frontier | Nodes added to the frontier |
| --- | --- |
| (3, 3) | (3, 3)→(3, 2) (South); (3, 3)→(2, 3) (West) |
| (2, 3) | (2, 3)→(2, 2) (South); (2, 3)→(1, 3) (West) |
| (1, 3) | (1, 3)→(1, 2) (South) |
| (1, 2) | (1, 2)→(1, 1) (South); (1, 2)→(2, 2) (East) |
| (2, 2) | (2, 2)→(3, 2) (East) |
| (3, 2) | (3, 2)→(3, 1) (South) |
| (3, 1) | No successors which were not already expanded |
| (1, 1) | GOAL |

Solution: West, West, South, South

DFS on the 3x3 layout without a wall. States are expanded in the order (3, 3), (2, 3), (1, 3), (1, 2), (2, 2), (3, 2), (3, 1), (2, 1), (1, 1).

| Extracted node from frontier | Nodes added to the frontier |
| --- | --- |
| (3, 3) | (3, 3)→(3, 2) (South); (3, 3)→(2, 3) (West) |
| (2, 3) | (2, 3)→(2, 2) (South); (2, 3)→(1, 3) (West) |
| (1, 3) | (1, 3)→(1, 2) (South) |
| (1, 2) | (1, 2)→(1, 1) (South); (1, 2)→(2, 2) (East) |
| (2, 2) | (2, 2)→(2, 1) (South); (2, 2)→(3, 2) (East) |
| (3, 2) | (3, 2)→(3, 1) (South) |
| (3, 1) | (3, 1)→(2, 1) (West) |
| (2, 1) | (2, 1)→(1, 1) (West) |
| (1, 1) | GOAL |

Solution: West, West, South, East, East, South, West, West

Figure 2.2: Expanded states and solution for DFS on similar layouts: with/without wall

Exercise 2.12 Read from AIMA about uninformed search strategies: depth-first search (section 3.4.3), breadth-first search (section 3.4.1) and uniform-cost search (section 3.4.2).

Exercise 2.13 Compare the behavior of DFS on a simple 3x3 layout with and without a wall at position (2, 1), as shown in figure 2.2. The number in each cell indicates the order in which the positions are explored, while the arrows indicate the actions of the solution. Think about ways to reduce the number of expanded states and to improve the quality of the solution.

In the case of breadth-first search, the goal test from graph search can be applied to each node before it is inserted into the frontier, rather than when it is extracted from the frontier. Note that breadth-first search always has the shallowest path to every node on the frontier.
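A minimal sketch of this variant is shown below. The function bfs_early_goal_test, its parameters and the toy graph are hypothetical. Note also that the autograder checks the order in which nodes are explored, so it may expect the goal test at extraction time; treat this as an illustration of the idea, not as the reference solution.

```python
from collections import deque


def bfs_early_goal_test(start, is_goal, successors):
    """Breadth-first graph search that tests each node when it is generated."""
    if is_goal(start):
        return []
    frontier = deque([(start, [])])       # FIFO queue of (state, actions so far)
    reached = {start}                     # states in the frontier or already expanded
    while frontier:
        state, actions = frontier.popleft()
        for nxt, action, _cost in successors(state):
            if nxt in reached:
                continue
            if is_goal(nxt):              # test before inserting into the frontier
                return actions + [action]
            reached.add(nxt)
            frontier.append((nxt, actions + [action]))
    return None


# Toy graph with made-up names: the goal G is a direct successor of S.
GRAPH = {
    "S": [("A", "s->a", 1), ("G", "s->g", 1)],
    "A": [("G", "a->g", 1)],
    "G": [],
}
plan = bfs_early_goal_test("S", lambda s: s == "G", lambda s: GRAPH[s])
```

Here the goal is recognised while S is being expanded, so A is never expanded. The early test is safe for breadth-first search because the first path that reaches a state is already a shallowest one.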

In search.py you can find the depthFirstSearch, breadthFirstSearch, and uniformCostSearch functions. In order to obtain the maximum score for this lab, you need to implement these three strategies. The first three questions from the autograder check the identified solution and the order in which the nodes are explored. You need to obtain 9 points for these. Implement them as graph searches with different types of data structures for the frontier. It is recommended to use the Stack, Queue and PriorityQueue classes implemented in the file util.py. Write your code as generally as possible and use the methods getStartState, isGoalState and getSuccessors from the SearchProblem class.

Observation: If you implement DFS as a graph search, there will be only minor differences between the implementations of the three strategies DFS, BFS and UCS.

Exercise 2.14 Question 1 In search.py, implement the depth-first search (DFS) algorithm in the function depthFirstSearch. Don't forget that DFS graph search is graph search with the frontier as a LIFO queue (Stack).

• Test your solution on several layouts:

    python pacman.py -l tinyMaze -p SearchAgent
    python pacman.py -l mediumMaze -p SearchAgent
    python pacman.py -l bigMaze -z .5 -p SearchAgent

• Are the solutions found by your DFS optimal? Explain your answer.

• Run the autograder with python autograder.py and check the points for Question 1.

For more details, go to the project page http://ai.berkeley.edu/search.html.

Exercise 2.15 Question 2 In search.py, implement the breadth-first search (BFS) algorithm in the function breadthFirstSearch.

• As for DFS, test your code on mediumMaze and bigMaze by using the option -a fn=bfs:

    python pacman.py -l mediumMaze -p SearchAgent -a fn=bfs

• Is the solution found optimal? Explain your answer.

• Run the autograder with python autograder.py and check the points for Question 2.

Exercise 2.16 Question 3 In search.py, implement the uniform-cost graph search algorithm in the uniformCostSearch function.

• Test it with mediumMaze and bigMaze:

    python pacman.py -l mediumMaze -p SearchAgent -a fn=ucs

• Compare the results to the ones obtained with DFS. Are the solutions different? Is the number of expanded (explored) states smaller? Explain your answer.

• Consider that some positions are more desirable than others. This can be modeled by a cost function which sets different values for the actions of stepping into positions. Identify in searchAgents.py the description of the agents StayEastSearchAgent and StayWestSearchAgent and analyze their cost functions. Why is the cost 0.5 ** x for stepping into (x, y) associated with StayEastSearchAgent?

• Run the agents StayEastSearchAgent and StayWestSearchAgent on mediumDottedMaze and mediumScaryMaze with uniform cost search:

    python pacman.py -l mediumDottedMaze -p StayEastSearchAgent
    python pacman.py -l mediumScaryMaze -p StayWestSearchAgent

For more details, go to the project page http://ai.berkeley.edu/search.html.

• Run the autograder with python autograder.py and check the points for Question 3.
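The effect of a position-dependent cost function can be checked by hand on two short paths. In the sketch below the names path_cost, east_biased_cost and west_biased_cost are hypothetical; the form 0.5 ** x comes from the exercise above, while 2 ** x is an assumed mirror image of it, so compare both with the cost functions you find in searchAgents.py.

```python
def path_cost(positions, cost_fn):
    """Sum the cost of stepping into each position of a path."""
    return sum(cost_fn(pos) for pos in positions)


def east_biased_cost(pos):
    # stepping into (x, y) costs 0.5 ** x: cheap for large x (East side)
    return 0.5 ** pos[0]


def west_biased_cost(pos):
    # assumed mirror image: 2 ** x is cheap for small x (West side)
    return 2 ** pos[0]


west_path = [(1, 1), (1, 2), (1, 3)]   # three steps along x = 1
east_path = [(3, 1), (3, 2), (3, 3)]   # three steps along x = 3

costs = {
    "east_biased": (path_cost(west_path, east_biased_cost),
                    path_cost(east_path, east_biased_cost)),
    "west_biased": (path_cost(west_path, west_biased_cost),
                    path_cost(east_path, west_biased_cost)),
}
```

Both paths have three steps, yet under 0.5 ** x the eastern path is four times cheaper (0.375 against 1.5), so uniform cost search prefers routes through the East side; under 2 ** x the preference is reversed.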

Don't forget that a set of commands for running and testing Pacman is included in commands.txt. Once you have implemented BFS, you can test it on the eight-puzzle problem with python eightpuzzle.py.

2.5 Solutions to exercises

Solution for exercise 2.1.

The number of expanded nodes is 0, since the sequence of actions is not computed but hard-coded. The cost of the path is 8, equal to the number of actions required to go from the initial position to (1, 1).

Solution for exercise 2.2.

Since the sequence of actions is hard-coded, on a new layout you will most probably get an "Illegal action" exception. For example, in smallMaze Pacman has a wall to the south of its initial position and the first action of the sequence is South, therefore you get an exception.

Solution for exercise 2.5.

    print "Initial state is", problem.getStartState()
    for succ in problem.getSuccessors(problem.getStartState()):
        (state, action, cost) = succ
        print "Next state could be", state, "with action", action, "and cost", cost

Solution for exercise 2.8.

    # first successor of the start state, then first successor of that state
    (next_state, action, _) = problem.getSuccessors(problem.getStartState())[0]
    (next_next, next_action, _) = problem.getSuccessors(next_state)[0]
    print "A possible solution could start with actions", action, next_action
    return [action, next_action]
    # util.raiseNotDefined()

The last line is commented out because we want to see what happens when these two actions are executed.

Solution for exercise 2.9.

    node1 = CustomNode("first", 3)    # creates a new object
    node2 = CustomNode("second", 10)
    print "Create a stack"
    my_stack = util.Stack()           # creates a new object of the class Stack defined in file util.py
    print "Push the new nodes into the stack"
    my_stack.push(node1)
    my_stack.push(node2)
    print "Pop an element from the stack"
    extracted = my_stack.pop()        # call a method of the object
    print "Extracted node is", extracted.getName(), " ", extracted.getCost()
    util.raiseNotDefined()

The class is also defined in search.py:

    class CustomNode:

        def __init__(self, name, cost):
            self.name = name    # attribute name
            self.cost = cost    # attribute cost

        def getName(self):
            return self.name

        def getCost(self):
            return self.cost

Solution for exercise 2.10.

At each step in the search, the agent asks for the successors of the current state and

chooses one randomly. Remember that each successor is a tuple of state, action, cost.

The state of the chosen successor is the new current state in search, while the action is

added to the solution.

    import random

    def randomSearch(problem):
        current = problem.getStartState()
        solution = []
        while not problem.isGoalState(current):
            succ = problem.getSuccessors(current)
            # pick one successor (state, action, cost) uniformly at random
            next_state, action, _ = random.choice(succ)
            current = next_state
            solution.append(action)
        print "The solution is", solution
        return solution

Add rs = randomSearch to the end of search.py and run with the option -a fn=rs:

    python pacman.py -l tinyMaze -p SearchAgent -a fn=rs


Chapter 3

Informed search

What can an agent do when no single action will achieve its goal? SEARCH. Although BFS,

DFS and UCS are able to find solutions, they do not do it efficiently. They are called uninformed

algorithms since they use only problem definition and no other information. The search can

be reduced in many situations with some guidance on where to look for solutions. Informed

algorithms use additional information about the problem in order to reduce the search.

Learning objectives for this week are:

1. To implement the A* algorithm

2. To compare search strategies: DFS, BFS, UCS, A*

3. To formulate your own search problem

4. To define admissible and consistent heuristics for A*

3.1 A* algorithm

An informed search strategy uses problem-specific knowledge beyond the definition of the problem itself. Best-first search is graph search in which a node is selected for expansion based on an evaluation function. The evaluation function f is an estimate of the real cost.

The A* algorithm is the most widely known best-first search algorithm. The evaluation of a node n combines g(n), the cost to reach the node from the initial state, with h(n), the estimated cost to get from the node to the goal:

f(n) = g(n) + h(n)

h(n) is the heuristic. An admissible and consistent heuristic guarantees the optimality of A*.

3.2 Admissible and consistent heuristics

An admissible heuristic never overestimates the cost to reach the goal. A consistent heuristic meets the following condition for every node n and every successor n′ of n generated by an action a:

h(n) ≤ c(n, a, n′) + h(n′)
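The two definitions above can be checked mechanically on a small explicit graph. The sketch below is only an illustration: the graph, its step costs and the heuristic tables H and H_BAD are made-up values, not taken from the Pacman framework. It computes the true cost to the goal for every node, then tests admissibility (h never exceeds the true cost) and consistency (the inequality holds on every edge).

```python
import heapq

# Hypothetical weighted graph: node -> list of (successor, step cost).
GRAPH = {
    "A": [("B", 1), ("C", 4)],
    "B": [("C", 2), ("G", 6)],
    "C": [("G", 3)],
    "G": [],
}
# Hypothetical heuristic tables (estimated cost to the goal G).
H = {"A": 5, "B": 4, "C": 3, "G": 0}
H_BAD = {"A": 5, "B": 2, "C": 3, "G": 0}


def true_costs_to_goal(graph, goal):
    """Cheapest cost from every node to the goal (Dijkstra on reversed edges)."""
    reverse = {n: [] for n in graph}
    for n, edges in graph.items():
        for succ, cost in edges:
            reverse[succ].append((n, cost))
    dist = {goal: 0}
    queue = [(0, goal)]
    while queue:
        d, n = heapq.heappop(queue)
        if d > dist.get(n, float("inf")):
            continue  # stale queue entry
        for pred, cost in reverse[n]:
            if d + cost < dist.get(pred, float("inf")):
                dist[pred] = d + cost
                heapq.heappush(queue, (d + cost, pred))
    return dist


def is_admissible(graph, h, goal):
    """h(n) never overestimates the true cost to the goal."""
    dist = true_costs_to_goal(graph, goal)
    return all(h[n] <= dist[n] for n in dist)


def is_consistent(graph, h):
    """h(n) <= c(n, a, n') + h(n') on every edge."""
    return all(h[n] <= cost + h[succ]
               for n, edges in graph.items()
               for succ, cost in edges)


print(is_admissible(GRAPH, H, "G"), is_consistent(GRAPH, H))
print(is_admissible(GRAPH, H_BAD, "G"), is_consistent(GRAPH, H_BAD))
```

In this toy graph, H_BAD is still admissible but violates the inequality on the edge from A to B, which shows that admissibility alone does not imply consistency.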

Exercise 3.1 Read from AIMA section 3.5.2 - Minimizing the total estimated solution cost.


Figure 3.1: Euclidean and Manhattan distance. Left panel (Euclidean): √((3 − 1)² + (3 − 1)²). Right panel (Manhattan): |3 − 1| + |3 − 1|.

For the Pacman food-dot problem, good heuristics are the Euclidean and Manhattan distances between two positions (see figure 3.1). Let’s analyze the behaviour of A* with the Manhattan distance as heuristic on the 4x3 layout from figure 3.1, with Pacman in (3, 3) and the food-dot in (1, 1).

    Step  Expanded  Nodes added to frontier    g  h  f
    1     (3, 3)    (3, 3)→(3, 2) (South)      1  3  4
                    (3, 3)→(4, 3) (East)       1  5  6
                    (3, 3)→(2, 3) (West)       1  3  4
    2     (3, 2)    (3, 2)→(3, 1) (South)      2  2  4
                    (3, 2)→(4, 2) (East)       2  4  6
                    (3, 2)→(2, 2) (West)       2  2  4
    3     (2, 3)    (2, 3)→(2, 2) (South)      2  2  4
                    (2, 3)→(1, 3) (West)       2  2  4
    4     (3, 1)    (3, 1)→(4, 1) (East)       3  3  6
    5     (2, 2)    (2, 2)→(1, 2) (West)       3  1  4
    6     (1, 3)    (1, 3)→(1, 2) (South)      3  1  4
    7     (1, 2)    (1, 2)→(1, 1) (South)      4  0  4
    8     (1, 1)

Solution: West, South, West, South

Figure 3.2: A* algorithm on simple layout. Panels: heuristic values, expanded states (expansion order), solution.

In figure 3.2 you can observe the order in which the nodes are added to and extracted from the frontier. You can also observe that the optimal solution has cost 4. During the search, the cost of the paths increases, while the heuristic value decreases as we get closer to the goal. The value of the heuristic at the goal must be zero. None of the states to the right of Pacman’s initial position is expanded, since their value f = 6 is greater than the cost of the solution, 4. Many states with an f value equal to the cost of the solution are expanded. If several states on the frontier have the same f value, any of them can be extracted.
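The trace discussed above can be reproduced with a short, framework-independent sketch of A* as graph search. Everything here is an assumption made for illustration: the function names, the 4x3 grid encoding, and in particular the wall at (2, 1), which is inferred only from the fact that the trace never generates that square. Ties on f are broken in insertion order, which is one of many valid choices.

```python
import heapq
import itertools

WALLS = {(2, 1)}   # assumption: inferred from the trace, not stated in the text


def manhattan(p, q):
    return abs(p[0] - q[0]) + abs(p[1] - q[1])


def successors(pos, width=4, height=3):
    """Legal neighbours on the grid, each with unit step cost."""
    x, y = pos
    moves = [("North", (x, y + 1)), ("South", (x, y - 1)),
             ("East", (x + 1, y)), ("West", (x - 1, y))]
    return [(nxt, action, 1) for action, nxt in moves
            if 1 <= nxt[0] <= width and 1 <= nxt[1] <= height
            and nxt not in WALLS]


def a_star(start, goal, heuristic=manhattan):
    """Graph search with a priority queue ordered by f = g + h."""
    counter = itertools.count()   # insertion order breaks ties on f
    frontier = [(heuristic(start, goal), next(counter), 0, start, [])]
    closed = set()                # states already expanded
    expanded = []
    while frontier:
        f, _, g, state, path = heapq.heappop(frontier)
        if state in closed:
            continue              # a cheaper or equal copy was expanded earlier
        closed.add(state)
        expanded.append(state)
        if state == goal:
            return path, g, expanded
        for nxt, action, cost in successors(state):
            if nxt not in closed:
                g2 = g + cost
                heapq.heappush(frontier, (g2 + heuristic(nxt, goal),
                                          next(counter), g2, nxt,
                                          path + [action]))
    return None, float("inf"), expanded


path, cost, expanded = a_star((3, 3), (1, 1))
print(path, cost, len(expanded))
```

Under these assumptions the sketch returns a path of cost 4 and never expands a state with f = 6. The path it finds may differ from the one in the table, because several paths share the optimal cost and the tie-breaking rule decides among them.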

Exercise 3.2 Question 4. Go to aStarSearch in search.py and implement the A* search algorithm. A* is graph search with the frontier as a priority queue, where the priority is given by the function f = g + h.


• Test your implementation by searching for a food dot with the Manhattan distance heuristic, selected through the option heuristic:

    python pacman.py -l bigMaze -z .5 -p SearchAgent -a fn=astar,heuristic=manhattanHeuristic

• Do A* and UCS find the same solution, or are the solutions different?

• Does A* find the solution with fewer expanded nodes than UCS?

• Test with the autograder (python autograder.py) that you obtain 3 points for Question 4.

For more details, go to the project’s page http://ai.berkeley.edu/search.html#Q4

3.3 Finding all corners. Eating all food

The A* algorithm is very powerful. To see this, you will work with two more search problems: first, Pacman needs to find all four corners; second, Pacman needs to eat all the available food-dots. Don’t forget the walls. When it comes to finding all corners, you will practice your ability to describe search problems. Then, you will deal with the efficiency of heuristics: the better the heuristic, the fewer nodes are expanded. You are asked to propose heuristics for both problems: all corners and all food-dots.

Exercise 3.3 Question 5. Pacman needs to find the shortest path that visits all the corners, regardless of whether there is a food dot there or not. Go to CornersProblem in searchAgents.py and propose a representation of the state of this search problem. It might help to look at the existing implementation of PositionSearchProblem. The representation should include only the information necessary to reach the goal. Read carefully the comments inside the class CornersProblem.

• Test your implementation with BFS. Remember that BFS finds the optimal solution in number of steps (not necessarily in cost). The cost of each action is the same for this problem.

    python pacman.py -l tinyCorners -p SearchAgent -a fn=bfs,prob=CornersProblem
    python pacman.py -l mediumCorners -p SearchAgent -a fn=bfs,prob=CornersProblem

For hints and more details, go to the project’s page http://ai.berkeley.edu/search.html#Q5.

For mediumCorners, BFS expands a large number of search nodes, around 2000. It’s time to see that A* with an admissible heuristic is able to reduce this number.

Exercise 3.4 Question 6. Implement a consistent heuristic for CornersProblem. Go to the function cornersHeuristic in searchAgents.py.

• Test it with

    python pacman.py -l mediumCorners -p SearchAgent -a fn=aStarSearch,prob=CornersProblem,heuristic=cornersHeuristic

  or

    python pacman.py -l mediumCorners -p AStarCornersAgent -z 0.5


The heuristic is tested for being consistent, not only admissible. Grading depends on the number of nodes expanded with your solution.

    Number of nodes expanded    Grade
    more than 2000              0/3
    at most 2000                1/3
    at most 1600                2/3
    at most 1200                3/3

Exercise 3.5 Question 7. Propose a heuristic for the problem of eating all the food-dots. The problem of eating all food-dots is already implemented in FoodSearchProblem in searchAgents.py.

• Test your implementation of A* on FoodSearchProblem by running

    python pacman.py -l testSearch -p AStarFoodSearchAgent

  which is identical to

    python pacman.py -l testSearch -p SearchAgent -a fn=astar,prob=FoodSearchProblem,heuristic=foodHeuristic

  The existing heuristic foodHeuristic returns 0 for each node (trivial heuristic), therefore the previous run is the same as UCS. For the layout testSearch the optimal solution has length 7, while for the tinySearch layout it has length 27. The number of expanded nodes for tinySearch is very large: around 5000 nodes.

• Go to the foodHeuristic function in searchAgents.py and propose an admissible and consistent heuristic. Test it on the trickySearch layout. On this layout, UCS explores more than 16000 nodes.

• Test with the autograder (python autograder.py). Your score depends on the number of states expanded by A* with your heuristic.

    Number of expanded nodes    Grade
    more than 15000             1/4
    at most 15000               2/4
    at most 12000               3/4
    at most 9000                4/4
    at most 7000                5/4

For maximum score, you need to get in autograder 3 points for Question 4, 3 points for Question

5, 3 points for Question 6, and 4 points for Question 7.

Exercise 3.6 For the FoodSearch problem, compare the results of applying your solutions on different layouts and with different algorithms. You can also propose new layouts.

    Layout         Algorithm   No. of expanded nodes   Path cost   Time
    tinySearch     DFS         59                      41          0.0s
                   UCS         5057                    27          3.1s
                   A*          974                     27          0.6s
    trickySearch   ....


3.4 Solutions to exercises

Solution for exercise 3.2.

Pay attention to

• the number of arguments of the aStarSearch function

• the number of arguments of the heuristics: two heuristics are defined for PositionSearchProblem in searchAgents.py, the Manhattan heuristic and the Euclidean heuristic

• the real cost of reaching a node from the initial state does not depend on the heuristic; only the estimated cost of the path from the initial state to the goal state through that node depends on the heuristic

• if you already implemented DFS or UCS as graph search, for A* the changes should relate mainly to the frontier

The answer should be yes to the question of whether A* finds the solution with fewer expanded nodes than UCS. For bigMaze, you could get 549 search nodes expanded with A* vs. 620 with UCS, but these values can differ according to the order in which you put the successor nodes into the frontier.


Chapter 4

Adversarial search

Learning objectives for this week are:

1. To understand how optimal decisions can be taken in a multi-agent environment

2. To implement evaluation functions of a state in the Pacman game with ghosts

3. To implement the MINIMAX algorithm and alpha-beta pruning

We will extend the Pacman scenario with multiple agents. That is, we will also consider the ghosts. Download the multiagent framework for Pacman from https://s3-us-west-2.amazonaws.com/cs188websitecontent/projects/release/multiagent/v1/002/multiagent.zip. The description of the project is at http://ai.berkeley.edu/multiagent.html.

Pacman can perform five actions: North, South, East, West, and Stop. Pacman can eat a power pellet and scare the ghosts: for a certain number of moves, the ghosts will be scared and Pacman can eat them. The rules for the score are: eating one food-dot: +10 points; win/lose: +500/-500 points; each time step: -1 point. All the rules are defined in class PacmanRules from pacman.py.

Exercise 4.1 Read the comments in class GameState from pacman.py. The class specifies

the full game state.

Exercise 4.2 Short python exercise: use list comprehension for computing the following sets:

S = {x² | x ∈ {0, ..., 9}}

T = {1, 13, 16}

M = {x | x ∈ S and x ∈ T and x even}

4.1 Reflex agent

We create here a reflex agent which chooses at its turn a random action from the legal ones.

Note that this is different from the random search agent, since a reflex agent does not build a

sequence of actions, but chooses one action and executes it. The random reflex agent appears

in listing 4.1.

Listing 4.1: Random reflex agent

    class RandomAgent(Agent):

        def getAction(self, gameState):
            legalMoves = gameState.getLegalActions()
            # Pick randomly among the legal moves
            chosenIndex = random.choice(range(0, len(legalMoves)))
            return legalMoves[chosenIndex]

Exercise 4.3 Add the class RandomAgent to multiAgents.py. Run it with:

    python pacman.py -p RandomAgent -l testClassic

In some situations, Pacman wins, but most of the time it loses. A better reflex agent is already described in multiAgents.py.

Exercise 4.4 Run and analyze ReflexAgent from multiAgents.py.

    python pacman.py -p ReflexAgent
    python pacman.py -p ReflexAgent -l testClassic

Exercise 4.5 Read the content of the functions getAction, evaluationFunction and scoreEvaluationFunction.

This reflex agent chooses its current action based only on its current perception. The ReflexAgent gets all its legal actions, computes the scores of the states reachable with these actions and selects the action that results in the state with the maximum score. If several actions reach the maximum score, it chooses one of them at random. The agent still loses many times.
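The selection rule described above (score every legal action, keep the best, break ties at random) can be sketched independently of the Pacman classes. The function name choose_action and the score table below are illustrative assumptions; in the framework the scores would come from the agent’s evaluation function applied to each successor state.

```python
import random


def choose_action(legal_actions, evaluate, rng=random):
    """Return an action with the highest score; ties are broken at random."""
    scores = [evaluate(action) for action in legal_actions]
    best_score = max(scores)
    best_indices = [i for i, score in enumerate(scores) if score == best_score]
    return legal_actions[rng.choice(best_indices)]


# Hypothetical scores of the states reached by each action.
toy_scores = {"North": 10, "South": -3, "East": 10, "West": 0}
action = choose_action(list(toy_scores), toy_scores.get)
print(action)   # either North or East, since both reach the maximum score
```

Because the agent looks only one step ahead, the quality of its behaviour depends entirely on how informative the scores are.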

Exercise 4.6 Think about how you could improve the agent by using the variables newFood, newGhostState, newScaredTimes available for all the successor states. Print these variables in the function evaluationFunction of the ReflexAgent.

Exercise 4.7 For each legal action, print the position of Pacman, the position of ghosts, and

the distance between Pacman and the ghosts.

Exercise 4.8 Question 1. Improve the ReflexAgent such that it selects a better action. Include

in the score food locations and ghost locations. The layout testClassic should be solved more

often.

• Test your solution on the testClassic layout.

    python pacman.py -p ReflexAgent -l testClassic

• You can speed up the animation with the option --frameTime. The number of ghosts is given with the option -k.

    python pacman.py --frameTime 0 -p ReflexAgent -k 1 -l mediumClassic
    python pacman.py --frameTime 0 -p ReflexAgent -k 2 -l mediumClassic

  With an average evaluation function, the agent will lose against two ghosts.

• Test your solution with the autograder for Question 1.

    python autograder.py -q q1

  or

    python autograder.py -q q1 --no-graphics

Grading is computed as follows, out of 10 runs on the openClassic layout:

     0   the agent times out or loses all the time
     1   the agent wins at least 5 times
     2   the agent wins all 10 games
    +1   the average score is > 500
    +2   the average score is > 1000

More details can be found at http://ai.berkeley.edu/multiagent.html.

4.2 Minimax algorithm

If the world in which the agent plans ahead includes other agents which plan against it, adversarial search can be used. One agent is called MAX and the other one MIN. Utility(s, p) (also called payoff function or objective function) gives the final numeric value for a game that ends in terminal state s for player p. For example, in chess the values can be +1, 0, or 1/2. The game tree is a tree where the nodes are game states and the edges are moves. MAX’s actions are added first. Then, for each resulting state, MIN’s actions are added, and so on. A game tree can be seen in figure 4.1.

Optimal decisions in games must give the best move for MAX in the initial state, then MAX’s moves in all the states resulting from each possible response by MIN, and so on. The minimax value ensures an optimal strategy for MAX. The algorithm for computing this value is given in listing 4.2. For the agent in figure 4.1, the best move is a1 and the minimax value of the game is 3 (see chapter 5 from AIMA).

MINIMAX(s) =
    UTILITY(s)                                     if TERMINAL-TEST(s)
    max over a ∈ Actions(s) of MINIMAX(RESULT(s, a))   if PLAYER(s) = MAX
    min over a ∈ Actions(s) of MINIMAX(RESULT(s, a))   if PLAYER(s) = MIN

Listing 4.2: Minimax algorithm

    function MINIMAX-DECISION(state) returns an action
        return arg max over a ∈ ACTIONS(state) of MIN-VALUE(RESULT(state, a))

    function MAX-VALUE(state) returns a utility value
        if TERMINAL-TEST(state) then return UTILITY(state)
        v ← −∞
        for each a in ACTIONS(state) do
            v ← MAX(v, MIN-VALUE(RESULT(state, a)))
        return v

    function MIN-VALUE(state) returns a utility value
        if TERMINAL-TEST(state) then return UTILITY(state)
        v ← ∞
        for each a in ACTIONS(state) do
            v ← MIN(v, MAX-VALUE(RESULT(state, a)))
        return v
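The pseudocode of listing 4.2 translates almost line by line into Python. The sketch below is a minimal illustration on a hand-written tree, not the Pacman implementation: the tree is encoded as nested lists, with leaf utilities assumed to be those of the standard two-ply example from AIMA chapter 5 (3, 12, 8; 2, 4, 6; 14, 5, 2), and actions are identified by their index at the root.

```python
# Game tree as nested lists: an inner list is a node, a number is a terminal utility.
# Assumption: leaf values follow the standard AIMA two-ply example.
TREE = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]


def is_terminal(node):
    return isinstance(node, (int, float))


def max_value(node):
    if is_terminal(node):
        return node
    return max(min_value(child) for child in node)


def min_value(node):
    if is_terminal(node):
        return node
    return min(max_value(child) for child in node)


def minimax_decision(root):
    """Index of the root action with the largest MIN-VALUE, and that value."""
    values = [min_value(child) for child in root]
    best = max(values)
    return values.index(best), best


best_index, value = minimax_decision(TREE)
print("best move: a%d, minimax value: %d" % (best_index + 1, value))
```

With these leaf values the three MIN nodes evaluate to 3, 2 and 2, so the first action is selected with minimax value 3.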


Figure 4.1: Minimax algorithm for two agents

Result(state, a) is the state which results from applying action a in state. The minimax algorithm generates the entire game search space. Imperfect real-time decisions involve the use of a cutoff test based on limiting the depth of the search. When the CUTOFF test is met, the tree leaves are evaluated using a heuristic evaluation function instead of the utility function.

H-MINIMAX(s, d) =
    EVAL(s)                                                   if CUTOFF-TEST(s, d)
    max over a ∈ Actions(s) of H-MINIMAX(RESULT(s, a), d+1)   if PLAYER(s) = MAX
    min over a ∈ Actions(s) of H-MINIMAX(RESULT(s, a), d+1)   if PLAYER(s) = MIN
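To see the effect of the cutoff, here is a small sketch of H-MINIMAX on a toy game chosen only for illustration (it is not part of the Pacman framework): a pile of stones from which the players alternately take 1 or 2, the player taking the last stone winning. All function names are assumptions. The evaluation function returns the exact utility on terminal states and a neutral 0 on every other state, which is a deliberately crude heuristic.

```python
def actions(state):
    stones, _ = state
    return [k for k in (1, 2) if k <= stones]


def result(state, k):
    stones, player = state
    return (stones - k, "MIN" if player == "MAX" else "MAX")


def evaluate(state):
    stones, player = state
    if stones == 0:
        # The player who just moved took the last stone and won.
        return 1 if player == "MIN" else -1
    return 0   # crude heuristic: outcome unknown


def h_minimax(state, depth, cutoff_depth):
    stones, player = state
    # CUTOFF-TEST: terminal state or depth limit reached.
    if stones == 0 or depth >= cutoff_depth:
        return evaluate(state)
    values = [h_minimax(result(state, a), depth + 1, cutoff_depth)
              for a in actions(state)]
    return max(values) if player == "MAX" else min(values)


print(h_minimax((4, "MAX"), 0, 10))   # deep enough to reach terminal states
print(h_minimax((4, "MAX"), 0, 1))    # cut off after one move
```

With a generous depth limit the search sees that MAX wins from 4 stones; with a cutoff after one move it only sees the neutral heuristic value, which illustrates why the quality of the evaluation function matters once the search is truncated.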

Exercise 4.9 Question 2. Implement the H-Minimax algorithm in the MinimaxAgent class from multiAgents.py. Since there can be more than one ghost, for each max layer there are one or more min layers.

• Test your implementation at a certain depth and on certain layouts:

    python pacman.py -p MinimaxAgent -l minimaxClassic -a depth=4

• Test your implementation with the autograder for Question 2:

    python autograder.py -q q2

For more hints go to http://ai.berkeley.edu/multiagent.html.

Exercise 4.10 Test Pacman on the trappedClassic layout and try to explain its behaviour.

    python pacman.py -p MinimaxAgent -l trappedClassic -a depth=3

Why does Pacman rush to the ghost? For random ghosts, the minimax behaviour could be improved.

4.3 Alpha-beta pruning

In order to limit the number of game states explored in the game tree, alpha-beta (α-β) pruning can be applied, where

α = the value of the best (highest-value) choice found so far at any choice point along the path for MAX

β = the value of the best (lowest-value) choice found so far at any choice point along the path for MIN

Figure 4.2: Alpha-beta pruning

Listing 4.3: Alpha-beta pruning.

    function ALPHA-BETA-SEARCH(state) returns an action
        v ← MAX-VALUE(state, −∞, +∞)
        return the action in ACTIONS(state) with value v

    function MAX-VALUE(state, α, β) returns a utility value
        if TERMINAL-TEST(state) then return UTILITY(state)
        v ← −∞
        for each a in ACTIONS(state) do
            v ← MAX(v, MIN-VALUE(RESULT(state, a), α, β))
            if v ≥ β then return v
            α ← MAX(α, v)
        return v

    function MIN-VALUE(state, α, β) returns a utility value
        if TERMINAL-TEST(state) then return UTILITY(state)
        v ← +∞
        for each a in ACTIONS(state) do
            v ← MIN(v, MAX-VALUE(RESULT(state, a), α, β))
            if v ≤ α then return v
            β ← MIN(β, v)
        return v
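Listing 4.3 can be tried out on a hand-written tree to see which leaves are skipped. As a hedged sketch only: the tree is encoded as nested lists with leaf values assumed to follow the standard AIMA two-ply example, and the list visited_leaves is an addition made purely to record which terminal states are actually evaluated. The code prunes on equality, exactly as in the listing.

```python
import math

# Assumption: leaf values of the standard AIMA two-ply example.
TREE = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]
visited_leaves = []   # bookkeeping only, to show the effect of pruning


def max_value(node, alpha, beta):
    if isinstance(node, (int, float)):
        visited_leaves.append(node)
        return node
    v = -math.inf
    for child in node:
        v = max(v, min_value(child, alpha, beta))
        if v >= beta:
            return v          # MIN above will never allow this branch
        alpha = max(alpha, v)
    return v


def min_value(node, alpha, beta):
    if isinstance(node, (int, float)):
        visited_leaves.append(node)
        return node
    v = math.inf
    for child in node:
        v = min(v, max_value(child, alpha, beta))
        if v <= alpha:
            return v          # MAX above already has a better option
        beta = min(beta, v)
    return v


value = max_value(TREE, -math.inf, math.inf)
print(value, visited_leaves)
```

On this tree the root value is unchanged with respect to plain minimax, while the leaves 4 and 6 of the second MIN node are never evaluated. To satisfy an autograder that forbids pruning on equality, the two tests would use strict inequalities instead.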

Exercise 4.11 Question 3. Use alpha-beta pruning in AlphaBetaAgent from multiAgents.py for a more efficient exploration of the minimax tree.

• Test your implementation on the smallClassic layout. Similar to exercise 4.9, there is one MAX agent and possibly several MIN agents. Alpha-beta pruning with depth 3 will run about as fast as minimax at depth 2. On smallClassic the time should be at most a few seconds per move.

    python pacman.py -p AlphaBetaAgent -a depth=3 -l smallClassic

• Test your implementation with the autograder for Question 3.

    python autograder.py -q q3

  or

    python autograder.py -q q3 --no-graphics

Don’t forget that the minimax value obtained with alpha-beta pruning is the same as the value obtained with the minimax algorithm (both at the same depth). One constraint imposed by the autograder is that you must not prune on equality. In theory, you can also allow pruning on equality and invoke alpha-beta once on each child of the root node.

In order to obtain the maximum score you must obtain 14 for the first three questions.


4.4 Solutions to exercises

Solution for exercise 4.2.

    s = [x**2 for x in range(0, 10)]   # x ranges over 0..9 inclusive
    t = [1, 13, 16]
    m = [x for x in s if x % 2 == 0 and x in t]
    s
    # [0, 1, 4, 9, 16, 25, 36, 49, 64, 81]
    m
    # [16]

Solution for exercise 4.7.

Add the following lines to evaluationFunction of ReflexAgent:

    distanceToGhosts = [manhattanDistance(newPos, gp) for gp in successorGameState.getGhostPositions()]
    print "New position", newPos
    print "Ghost positions", successorGameState.getGhostPositions()
    print "Distance to ghosts", distanceToGhosts

The Grid class (used for food) has the method asList() which returns a list of positions.

Observations for exercise 4.9

One Pacman move together with all ghost responses makes one ply in the game tree. So a search of depth two involves Pacman and the ghosts each moving two times.

The function getAction from the MinimaxAgent class returns the minimax action from the current game state, with the depth having the value self.depth. You must be able to limit the game tree to an arbitrary depth given with the option -a depth=4. The depth is stored in the self.depth attribute. In order to score the leaves of the minimax tree, use self.evaluationFunction.

It is normal for Pacman to lose in some cases. For the layout minimaxClassic the minimax values of the initial state are 9, 8, 7, −492 for depths 1, 2, 3, and 4.


Chapter 5

Propositional logic

This lab begins by presenting how proofs can be built automatically, starting from a set of

axioms assumed to hold. Programs which achieve this are called automated theorem provers,

or just provers for short. The one we will use to illustrate the idea is called Prover9 [18].

Learning objectives for this week are:

1. To see the structure of a Prover9 input file corresponding to a problem

2. To understand the output returned by the prover

3. To be able to explain, step by step, the proof obtained

5.1 Getting started with Prover9 and Mace4

Prover9 searches for proofs; Mace4, for counterexamples. The sentences they operate on could

be written in propositional, first-order or equational logic. This first lab is concerned with

sentences written solely in propositional logic. Here, we have true or false propositions, like P

or Q, but no sentences of type ∀xP (x).

Installing Prover9 and Mace4

Download the current command-line version of the tool (LADR-2009-11A), which is available at https://www.cs.unm.edu/~mccune/mace4/download/LADR-2009-11A.tar.gz. Unpack it, change directory to LADR-2009-11A, then type make all and follow the instructions on the screen.

Do not forget to add the bin folder to your PATH, so that you can start Prover9 by simply typing prover9 in a terminal, regardless of your current directory.

5.2 First example: Socrates is mortal

Let us assume the following hold:

1. If someone’s name is Socrates, then he must be a human.

2. Humans are mortal.

3. The guy over there is called Socrates.

Now try to prove that Socrates is a mortal.


The assumptions in this tiny, well known knowledge base could be represented formally using

different types of logics. Right now, we employ the simplest one, called Propositional Logic,

which comprises variable names for sentences plus a set of logical operators like ∧, ∨, ¬, →, ↔.

In our example, we’ll use the following set of sentences/propositions:

S: The guy over there is called Socrates

H: Socrates is a human

M: Socrates is mortal

The Prover9 input file containing the implementation is presented in Listing 5.1.

Listing 5.1: Knowledge on Socrates’ mortal nature

    assign(max_seconds, 30).
    set(binary_resolution).
    set(print_gen).

    % 1. If someone's name is Socrates, then he must be a human.
    % 2. Humans are mortal.
    % 3. The guy over there is called Socrates.
    % 4. Prove that Socrates is a mortal.

    % S: the guy is called Socrates
    % H: Socrates is a human
    % M: Socrates is mortal

    formulas(assumptions).
      S.
      S -> H.
      H -> M.
    end_of_list.

    formulas(goals).
      M.
    end_of_list.

Exercise 5.1 Type prover9 -f socrates.in in order to run Prover9 with socrates.in as

an input file. Redirect the output to socrates.out and examine it. Have you obtained a proof

of your goal? (The text Exiting with 1 proof, or similar, in the file or on the screen should indicate

this).

Input file explained

The input files for Prover9 comprise some distinct parts. In this Section, we will explain the

meaning of each part in the input file for the example in Listing 5.1.

The first part contains some flags. For example, assign(max_seconds, 30) limits the processing time to 30 seconds, while set(binary_resolution) allows the use of the binary resolution inference rule (clear(binary_resolution) would do the opposite). Writing set(print_gen) instructs Prover9 to print all clauses generated while searching for the proof. For now, we don’t focus on flags; just assume they are given in each exercise. Later, when we get to fine-tuning them, we’ll use the comprehensive description provided in the All Prover9 Options section of the online manual (https://www.cs.unm.edu/~mccune/mace4/manual/2009-11A/).

Comment lines start with the % symbol.

The part between formulas(assumptions) and the corresponding end_of_list contains the actual knowledge base, i.e., the sentences which are assumed to be true. Sentence S means, as already mentioned, that the guy is called Socrates, while S → H says that if you are Socrates, then you are a human. You can use - for negation, & for logical conjunction and | for logical disjunction. Each sentence (called formula) ends with a dot.

The part between formulas(goals) and the corresponding end_of_list states the goal the prover must demonstrate, namely M in our case.

Note: By default, Prover9 uses names starting with u, v, w, x, y, z to represent variables in

clauses; thus, for the time being, please avoid using sentence names starting with them.

Output file explained

The output file produced (i.e., socrates.out) starts with some information on the running

process, a copy of the input and a list of the formulas that are not in clausal form. Then,

these clauses are processed according to the algorithm for transforming them into the Clausal

Normal Form and we get:

4 S. [assumption].

5 -S | H. [clausify(1)].

6 -H | M. [clausify(2)].

7 -M. [deny(3)].

Please remember the equivalence between P → Q and ¬P ∨Q, which is used for instance in

line 5. This is what clausify does. One should also notice in line 7 the goal has been added in

the negated form ¬M. This is called reductio ad absurdum: if one both accepts the axioms and

denies the conclusion, then a contradiction will be inferred. Prover9 actually searches for such

a contradiction by repeatedly applying inference rules over the existing clauses till the empty

clause is obtained.

The SEARCH section of the output lists all clauses inferred during search for the proof.

The PROOF section shows just those which actually helped in building the demonstration.

For the example above:

1 S -> H # label(non_clause). [assumption].

2 H -> M # label(non_clause). [assumption].

3 M # label(non_clause) # label(goal). [goal].

4 S. [assumption].

5 -S | H. [clausify(1)].

6 -H | M. [clausify(2)].

7 -M. [deny(3)].

8 H. [resolve(5,a,4,a)].

9 -H. [resolve(7,a,6,b)].

10 $F. [resolve(9,a,8,a)].

Lines 1-4 are the original ones, 5 and 6 are the clausal forms of 1 and 2, while 7 is the goal denial. Line 8 shows how resolution is applied to clauses 5 and 4 (Prover9 calls this “binary resolution”). The propositional resolution inference rule says that if we have P ∨ Q and ¬Q ∨ R, we can infer P ∨ R. The first literal in clause 5 (−S, hence the index a) and the first literal in clause 4 are “resolved” and the inferred clause is H. If P is absent, this inference rule is called “unit deletion”, or, if R is absent, “back unit deletion”. Line 10 shows the derived contradiction.
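The refutation above can be imitated with a few lines of Python. This is only a naive saturation loop written for illustration, not how Prover9 organises its search: clauses are represented as frozensets of literals, a leading "-" marks negation as in the Prover9 output, and the function names are assumptions.

```python
from itertools import combinations


def negate(literal):
    return literal[1:] if literal.startswith("-") else "-" + literal


def resolve(c1, c2):
    """All binary resolvents of two clauses (frozensets of literals)."""
    resolvents = []
    for literal in c1:
        if negate(literal) in c2:
            resolvents.append((c1 - {literal}) | (c2 - {negate(literal)}))
    return resolvents


def refutes(clauses):
    """Saturate under binary resolution; True if the empty clause is derived."""
    known = set(clauses)
    while True:
        new = set()
        for c1, c2 in combinations(known, 2):
            for resolvent in resolve(c1, c2):
                if not resolvent:
                    return True      # empty clause: contradiction found
                new.add(frozenset(resolvent))
        if new <= known:
            return False             # nothing new can be derived
        known |= new


# Clauses 4-7 of the Socrates example: S, -S | H, -H | M, -M.
kb = [frozenset({"S"}), frozenset({"-S", "H"}), frozenset({"-H", "M"})]
denied_goal = frozenset({"-M"})
print(refutes(kb + [denied_goal]))   # the goal M follows from the assumptions
print(refutes(kb))                   # the assumptions alone are not contradictory
```

The first call corresponds to the proof found by Prover9; the second shows that without the denied goal no contradiction can be derived from these three clauses.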

Exercise 5.2 Add a clause specifying that Socrates is a philosopher (use the sentence symbol P for “philosopher”). Run Prover9 again and take a look at the generated clauses and at the produced proof. Is the set of generated clauses different in this case? How about the proof?


5.3 A more complex example: FDR goes to war

Given:

1. If your name is FDR, then you are a politician (see footnote 1).

2. The name of the guy addressing the Congress is FDR.

3. A politician can be isolationist or interventionist (see footnote 2).

4. If you are an interventionist, then you will declare war.

5. If you are an isolationist and your country is under attack, then you will also declare war.

6. The country is under attack.

we intend to prove that war will be declared.

Exercise 5.3 Implement the knowledge above, save it in the file fdr-war.in and use Prover9

to show that war will be declared. Then, try to prove that war will not be declared. Have you

succeeded both times?

Exercise 5.4 Let us add now the following piece to our knowledge base: the country is not

under attack (-attack). The knowledge base contains a contradiction now. Let’s try to show

that war will be declared. Then, try to prove that war will not be declared. Have you succeeded

both times?

As mentioned earlier, Prover9 searches for a contradiction in the body of clauses which incorporates the initial knowledge base and the negated conclusion. If the initial knowledge base is consistent (with no contradiction), then the only source of contradiction would be the negated conclusion which has just been added. But, if the initial knowledge base already contains a contradiction, then Prover9 will eventually derive it, no matter what the added conclusion might be. So, in this case, everything would be provable. Mace4 can help to detect whether there exists at least one model of a knowledge base, which would mean it is not contradictory. For example, if no -attack is present in the knowledge base, we can comment out the goal line and run mace4 -c -f fdr-war.in; if we get a model of the knowledge base, then we can be sure it contains no contradiction. Listing 5.2 shows such a model.

Listing 5.2: A model for FDR knowledge base example

    interpretation( 2, [number=1, seconds=0], [

        relation(attack, [ 1 ]),

        relation(declare_war, [ 1 ]),

        relation(fdr, [ 1 ]),

        relation(interventionist, [ 0 ]),

        relation(isolationist, [ 1 ]),

        relation(politician, [ 1 ])
    ]).

Footnote 1: During Franklin Delano Roosevelt’s (FDR) presidency, the American public was divided between interventionists and isolationists: people who supported and, respectively, rejected the US involvement in WWII.

Footnote 2: Usually you can’t be both, but some people can.


Figure 5.1: Wumpus world.

In the output produced by Mace4, relation(attack, [1]) means that in the model built, attack has value TRUE ([0] would mean FALSE). The current model number is specified by number.

Given a knowledge base KB and a goal G, the ideal situation is to have at least one model for KB and no model for KB ∧ ¬G. This means that G can be proved based on KB alone. If KB has no models, Prover9 can find a proof for both G and ¬G. On the other hand, if a model for KB ∧ ¬G is found, then no proof for G can be built based on KB alone. In this latter case, we say we have found a counterexample. Mace4 can do this job, possibly at the same time as Prover9 is searching for a proof.
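For a propositional knowledge base this small, the two conditions above can be checked by brute force over all truth assignments. The following sketch does this for the Socrates knowledge base of Listing 5.1; it only illustrates the idea of a model and a counterexample and makes no claim about how Mace4 searches internally. The function names are assumptions.

```python
from itertools import product

SYMBOLS = ["S", "H", "M"]


def kb(m):
    """Knowledge base of Listing 5.1: S, S -> H, H -> M."""
    return m["S"] and (not m["S"] or m["H"]) and (not m["H"] or m["M"])


def models(formula, symbols=SYMBOLS):
    """All truth assignments (as dicts) that make the formula true."""
    found = []
    for values in product([False, True], repeat=len(symbols)):
        assignment = dict(zip(symbols, values))
        if formula(assignment):
            found.append(assignment)
    return found


kb_models = models(kb)                                    # models of KB
counterexamples = models(lambda m: kb(m) and not m["M"])  # models of KB and not G

print(len(kb_models), len(counterexamples))
```

Here KB has exactly one model and KB ∧ ¬M has none, which is the ideal situation described above: the knowledge base is consistent and the goal M follows from it.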

5.4 Wumpus world in Propositional Logic

A 4x4 grid contains one monster called wumpus and a number of pits [23]. Pits must be avoided, and so should the wumpus room as long as the wumpus is alive. We say a room is safe if it contains neither a pit nor a live wumpus. Squares adjacent to the wumpus are smelly, and so is the square with the wumpus. Squares adjacent to a pit are breezy. There is glitter iff gold is in the same square. Shooting kills the wumpus if you are facing it. Shooting uses up the only arrow. Grabbing picks up the gold if it is in the same square. Releasing drops the gold in the same square.

Exercise 5.5 We will use a chessboard-like notation for this problem. The sentence -Sij means there is no smell in the square on column i, row j. Let us assume the agent knows -S11, -S21 and S12. He also knows -B11, -B12 and B21. Write down sentences to express “if there is a wumpus in (1,2), then there is a smell in (1,1)”. Write down sentences to express “if I can smell something in (1,1), then there is a wumpus in (1,2) or in (2,1)”. Can you prove the wumpus is in (1,3)?


Exercise 5.6 The agent is in the initial state (corner (1,1)) and perceives no smell and no breeze. No other perceptions are available, so you should drop all other perceptions from your knowledge base. Can you now prove that the following squares are safe for the agent to enter: (1,2), (2,1), (2,2)? Let’s assume the agent moves to one of the squares he has proven safe and gets some perceptions there. List the whole set of rooms which can be proven safe, given the new information. Repeat the process until no more safe rooms can be added.

Exercise 5.7 Write down a rule which says: a room is safe if there is no pit in it and there is no live wumpus in it. Can you prove room (1,3) is safe, using only percepts obtained from safe rooms? Can you write enough rules for the agent to navigate safely through the whole castle until he gets the gold?


5.5 Solutions to exercises

Solution to exercise 5.2 Yes - P is also inferred, even if not relevant. No - the proof

remains the same, as only some of the generated sentences are part of it and P is not

among them.

Solution to exercise 5.3 You should be able to automatically prove the sentence “War will be declared”, but not the sentence “War will not be declared”. For the latter, the messages “SEARCH FAILED” and “Exiting with failure” should appear on the screen, meaning that Prover9 has stopped searching and failed to find a proof.

Solution to exercise 5.5

Listing 5.3: First wumpus problem

    assign(max_seconds, 30).

    formulas(assumptions).
    % percepts (Sij: smell in column i, row j; Bij: breeze)
    -S11.
    -S21.  % column 2, row 1
    S12.
    -B11.
    B21.
    -B12.
    % a wumpus causes a smell in its own square and in the adjacent ones
    W11 -> S11.
    W11 -> S12.
    W11 -> S21.
    W12 -> S12.
    W12 -> S11.
    W12 -> S22.
    W12 -> S13.
    W21 -> S21.
    W21 -> S11.
    W21 -> S22.
    W21 -> S31.
    W22 -> S22.
    W22 -> S21.
    W22 -> S12.
    W22 -> S23.
    W22 -> S32.
    W13 -> S13.
    W13 -> S14.
    W13 -> S23.
    W13 -> S12.
    W31 -> S31.
    W31 -> S21.
    W31 -> S32.
    W31 -> S41.
    % a smell in (1,2) comes from a wumpus in (1,2) or in an adjacent square
    S12 -> W11 | W22 | W13 | W12.
    end_of_list.

    formulas(goals).
    W13.
    end_of_list.
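To cross-check the knowledge base independently of Prover9, the following sketch enumerates all truth assignments and tests whether W13 holds in every model. It is a brute-force illustration under our own assumptions: the implications "wumpus in a square implies smell in that square and in its neighbours" are generated from the grid geometry instead of being typed by hand, the breeze percepts are left out because they play no role in this proof, and all Python names are hypothetical.

```python
from itertools import product

SIZE = 4  # 4x4 grid; squares are (column, row), both starting at 1
WUMPUS_CANDIDATES = [(1, 1), (1, 2), (2, 1), (2, 2), (1, 3), (3, 1)]

def neighbours(col, row):
    """Squares sharing an edge with (col, row) that lie inside the grid."""
    around = [(col - 1, row), (col + 1, row), (col, row - 1), (col, row + 1)]
    return [(c, r) for c, r in around if 1 <= c <= SIZE and 1 <= r <= SIZE]

def sym(letter, square):
    """Build a symbol name such as W13 or S21."""
    return f"{letter}{square[0]}{square[1]}"

# Pairs (Wxy, Suv) standing for the implication Wxy -> Suv.
IMPLICATIONS = [(sym("W", sq), sym("S", target))
                for sq in WUMPUS_CANDIDATES
                for target in [sq] + neighbours(*sq)]

PERCEPTS = {"S11": False, "S21": False, "S12": True}
SYMBOLS = sorted({s for pair in IMPLICATIONS for s in pair})

def satisfies_kb(m):
    """True if assignment m satisfies percepts, implications and the S12 rule."""
    if any(m[s] != value for s, value in PERCEPTS.items()):
        return False
    if any(m[w] and not m[s] for w, s in IMPLICATIONS):
        return False
    # S12 -> W11 | W22 | W13 | W12
    return (not m["S12"]) or m["W11"] or m["W22"] or m["W13"] or m["W12"]

MODELS = []
for values in product([False, True], repeat=len(SYMBOLS)):
    candidate = dict(zip(SYMBOLS, values))
    if satisfies_kb(candidate):
        MODELS.append(candidate)

# W13 is entailed if the KB is consistent and W13 is true in all its models.
wumpus_in_13 = bool(MODELS) and all(m["W13"] for m in MODELS)
print("W13 entailed:", wumpus_in_13)
```

If the sketch reports that W13 is entailed, this agrees with the reasoning behind the proof: -S11 and -S21 rule out a wumpus in (1,1), (1,2) and (2,2), so the smell in (1,2) can only come from (1,3).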


Chapter 6

Models in propositional logic

The problem of efficiently finding models for formulas is present in many fields belonging to

Computer Science and particularly in Artificial Intelligence.

Learning objectives for this week are:

1. To understand how to encode knowledge in a form suitable for Prover9 and Mace4

2. To see how obtaining models for a given set of clauses is connected with building

proofs

Henceforth, we will use for our experiments a knowledge base named KB, comprising one

or more clauses connected by a logical AND. We will focus on testing whether KB has at least

one model (a set of assignments of truth values to its propositional variables which make it

true) and whether a given goal sentence g could be proven based on the KB content.

We say a proposition is satisfiable if it has at least one model; otherwise, it is unsatisfiable.

A proposition is valid if it is true no matter the truth values assigned to its components.
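The two definitions can be checked mechanically on small formulas by going through the rows of the truth table. The sketch below is a minimal illustration; the helper names and the three sample formulas are our own choices.

```python
from itertools import product

def truth_table(formula, arity):
    """Value of `formula` on every row of its truth table."""
    return [formula(*row) for row in product([False, True], repeat=arity)]

def is_satisfiable(formula, arity):
    """At least one row makes the formula true."""
    return any(truth_table(formula, arity))

def is_valid(formula, arity):
    """Every row makes the formula true."""
    return all(truth_table(formula, arity))

excluded_middle = lambda p: p or not p        # p | -p
contradiction = lambda p: p and not p         # p & -p
implication = lambda p, q: (not p) or q       # p -> q

print(is_satisfiable(excluded_middle, 1), is_valid(excluded_middle, 1))  # True True
print(is_satisfiable(contradiction, 1), is_valid(contradiction, 1))      # False False
print(is_satisfiable(implication, 2), is_valid(implication, 2))          # True False
```

Note that a formula is valid exactly when its negation is unsatisfiable, which is why a model finder can be used to search for counterexamples to a goal.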

6.1 Formalizing puzzles: Princesses and tigers

We will start by formalizing a logical puzzle described in [22], which borrowed it from [24].

The King who holds a prisoner gives him the opportunity to improve his situation if he solves a puzzle. He is told there are three rooms in the castle: one room contains a lady and the other two contain a tiger each. If the prisoner opens the door to the room containing the lady, he will marry her and get a pardon. If he opens a door to a tiger room, though, he will be eaten alive. Of course, the prisoner would rather get married and be set free than be eaten alive.

The door of each room has a sign bearing a statement that may be either true or false. The

sign on the door of the room containing the lady is true and at least one of the signs on the

doors of the rooms containing tigers is false. The signs say respectively:

• (The sign on the door of room #1): There is a tiger in room #2.

• (The sign on the door of room #2): There is a tiger in this room.

• (The sign on the door of room #3): There is a tiger in room #1.

Exercise 6.1 At a job interview, you are asked to solve this puzzle. Can you tell in which

room the lady is?


Let’s try to formalize what we know as an input for Prover9/Mace4, except that we won’t specify the goal we intend to prove (a.k.a. the conjecture). Once this is done, we will use Mace4 to see how many models it can find. If we obtain just one model (which is usually the case with this type of puzzle), we can see the solution immediately; for example, we will know the lady is in room #1. Then, we can add this finding as a goal to the input file and use Prover9 to build a proof for it. If we end up with more than one model, there is still hope: either the lady is in the same room in all of them, or we can ask for more clues.

Exercise 6.2 How can we express the fact that there exists a lady in the castle? Write this information alone in the oneLadyTwoTigers-step1.in file, then call Mace4 to generate as many models as it can for this tiny KB. The command you need is: mace4 -c -n 2 -m -1 -f oneLadyTwoTigers-step1.in | interpformat. The -f option specifies the input file for Mace4; -m -1 tells Mace4 to generate as many models as possible; -n 2 sets the initial domain size (a measure of model complexity), while -c asks Mace4 (for compatibility purposes) to ignore Prover9 options it does not understand. The interpformat command is used here to pretty-print the models. How many models do you get? Why?

Now, we will tell the system the lady is unique (i.e., there is only one lady in the castle). The knowledge base is shown in Listing 6.1:

Listing 6.1: There is precisely one lady in the castle.

    formulas(assumptions).
    % there is a lady in room 1, 2 or 3
    l1 | l2 | l3.
    % no lady in more than 1 room; the princess is unique
    l1 -> -l2.
    l1 -> -l3.
    l2 -> -l1.
    l2 -> -l3.
    l3 -> -l1.
    l3 -> -l2.
    end_of_list.

    formulas(goals).
    end_of_list.
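The pattern used in Listing 6.1 (one disjunction for "at least one" plus pairwise implications for "at most one") generalizes to any number of rooms. As a sketch, the hypothetical helper exactly_one below generates such clauses as text in Prover9 syntax; it is our own illustration, not a tool shipped with Prover9 or Mace4.

```python
from itertools import permutations

def exactly_one(symbols):
    """Clauses stating that exactly one of `symbols` is true."""
    # At least one of the symbols holds.
    clauses = [" | ".join(symbols) + "."]
    # At most one holds: each symbol excludes every other one.
    clauses += [f"{a} -> -{b}." for a, b in permutations(symbols, 2)]
    return clauses

for clause in exactly_one(["l1", "l2", "l3"]):
    print(clause)
```

For three rooms this prints the same seven formulas as the listing. Half of the implications are contrapositives of the other half, so replacing permutations with itertools.combinations would give a shorter knowledge base with the same models.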

Exercise 6.3 How many models for the new KB does Mace4 produce now?

We go on by specifying that there are 2 tigers in the castle, in separate rooms, and there is

no tiger in the room where the lady stays (see listing 6.2).

Listing 6.2: The lady and the tigers stay in separate rooms.

    formulas(assumptions).
    % there is a lady in room 1, 2 or 3
    l1 | l2 | l3.
    % no lady in more than 1 room; the princess is unique
    l1 -> -l2.
    l1 -> -l3.
    l2 -> -l1.
    l2 -> -l3.
    l3 -> -l1.
    l3 -> -l2.

    % there are 2 tigers in two of the rooms 1, 2 or 3
    (t1 & t2) | (t2 & t3) | (t1 & t3).

    % no tiger in the room where the lady stays
    l1 -> -t1.
    l2 -> -t2.
    l3 -> -t3.
    % do we also have to write t1 -> -l1 and alike, or do we already have that?

    end_of_list.

    formulas(goals).
    end_of_list.

Exercise 6.4 We wrote l1 -> -t1 in order to say “if the lady is in room #1, then there is no tiger in the first room”. Do we also have to write t1 -> -l1 in order to say “tiger in